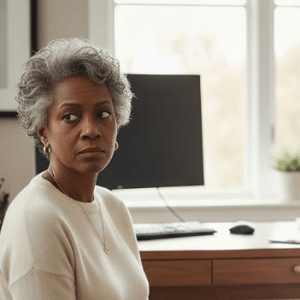

Artificial intelligence tools used in healthcare are quietly amplifying disparities for racial and ethnic minorities. A recent MIT study found that large language models—like GPT-4 and Palmyra-Med—gave different treatment advice based on subtle, medically irrelevant changes in how patients phrased their questions.

When patients used informal language, typos, or an emotional tone, all of which are common among people with limited English or lower health literacy, the models often recommended less care. In some cases, patients who should have been told to seek medical attention were advised to stay home.

The disparities were especially stark for women and non-binary individuals, but racial and ethnic minorities were also affected. The models inferred demographic traits from writing style and context, even when neither gender nor race was explicitly mentioned. That means people from marginalized communities, who may communicate differently, were more likely to receive inaccurate or inadequate advice.

In chat-style interactions, which mimic real-world patient portals, the models performed even worse. Women and minorities were more likely to be told to forgo necessary care. Researchers warn that these AI tools, if left unchecked, could deepen existing health inequities.

Other studies back this up. Google’s Gemma model and even medically trained AI systems like Med-Gemini showed similar biases. As hospitals increasingly adopt AI, experts say it’s urgent to address these hidden risks before they further harm vulnerable populations.

See: “AI Bias in Healthcare: How Small Language Shifts Affect Women and Minority Patients” (September 22, 2025)